May 22, 2024

How to add a robots.txt file to your website?

Access the Robot.txt Generator Plugin

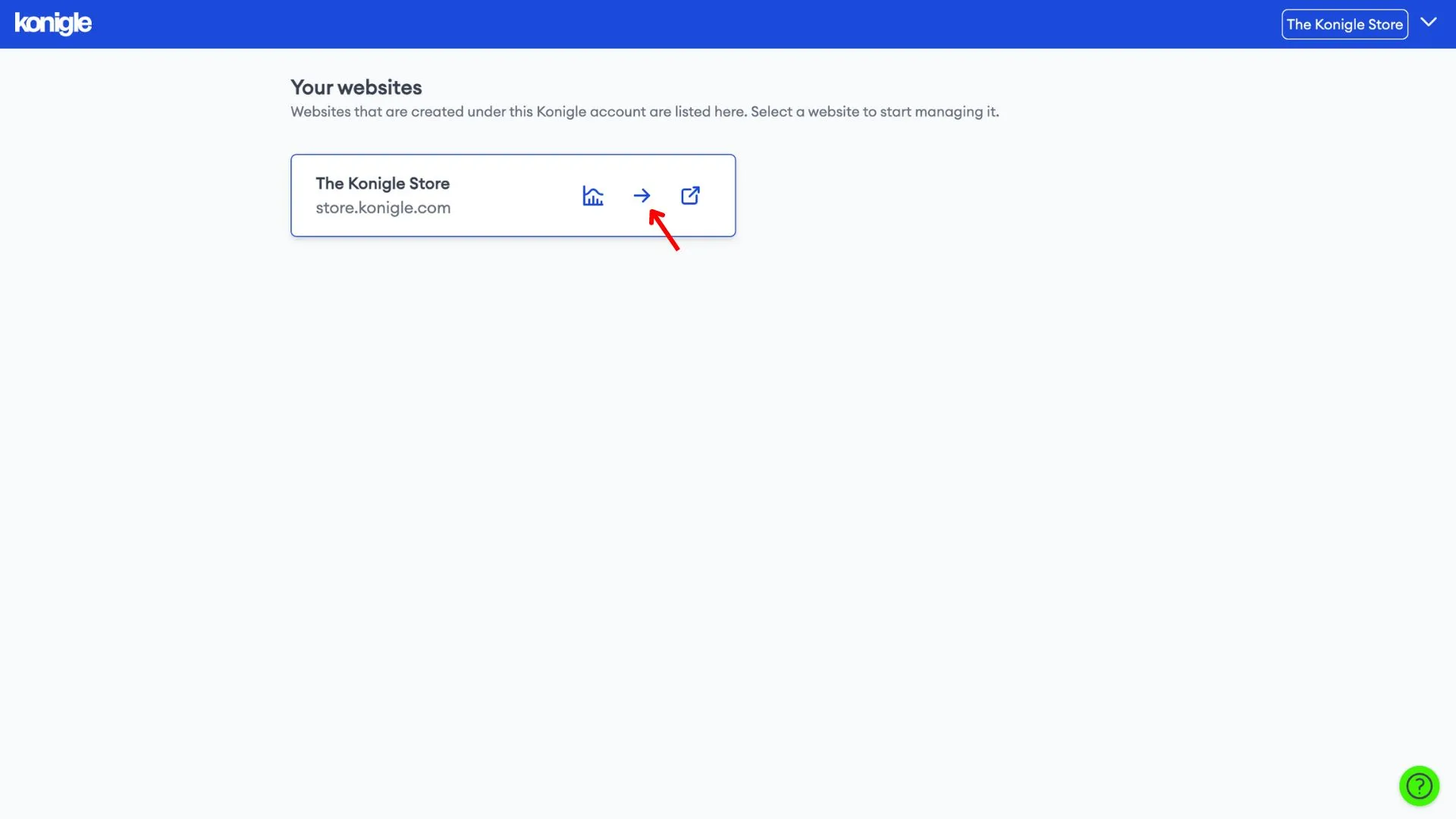

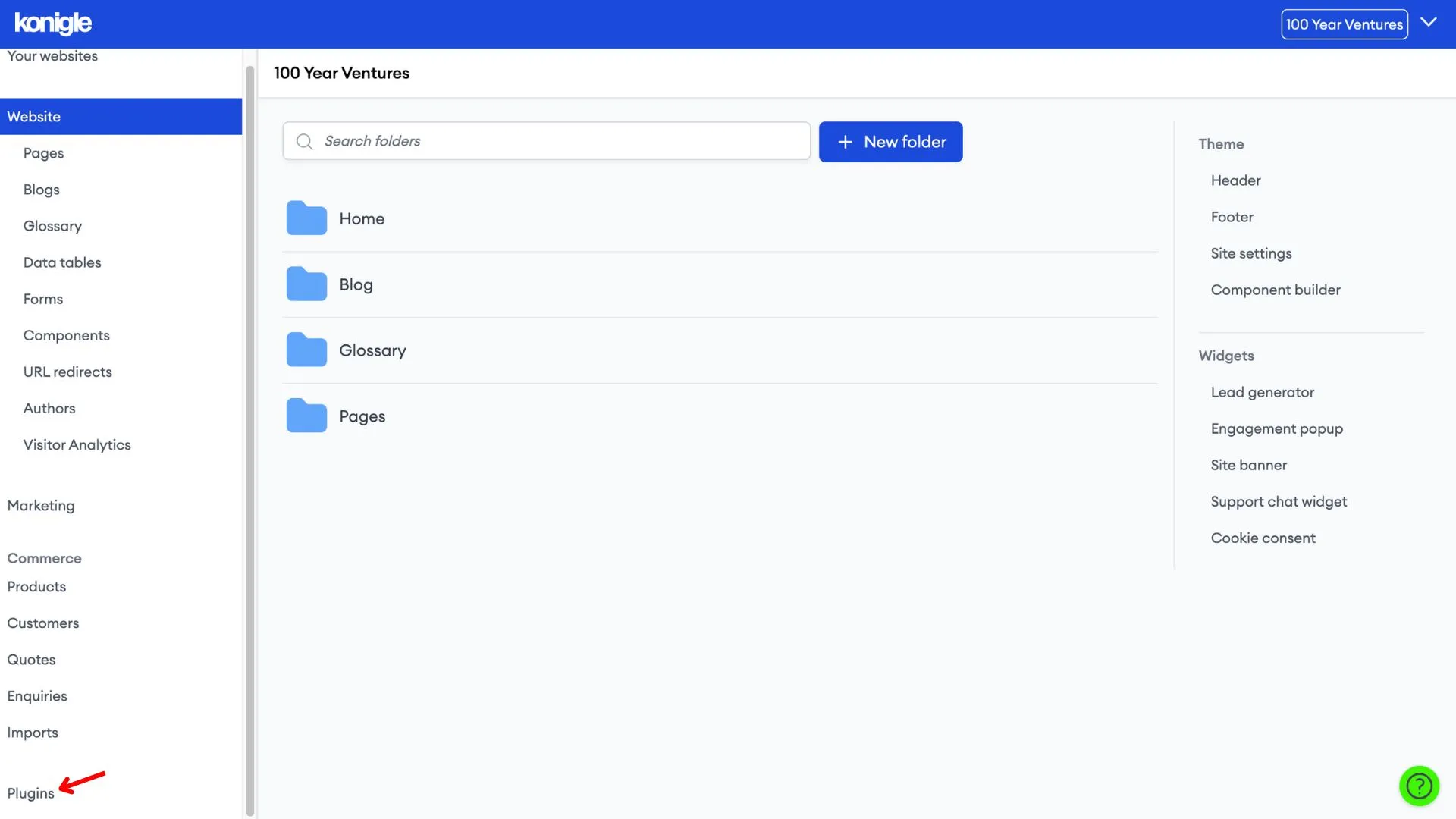

1. Select the website you wish to edit and go to plugins.

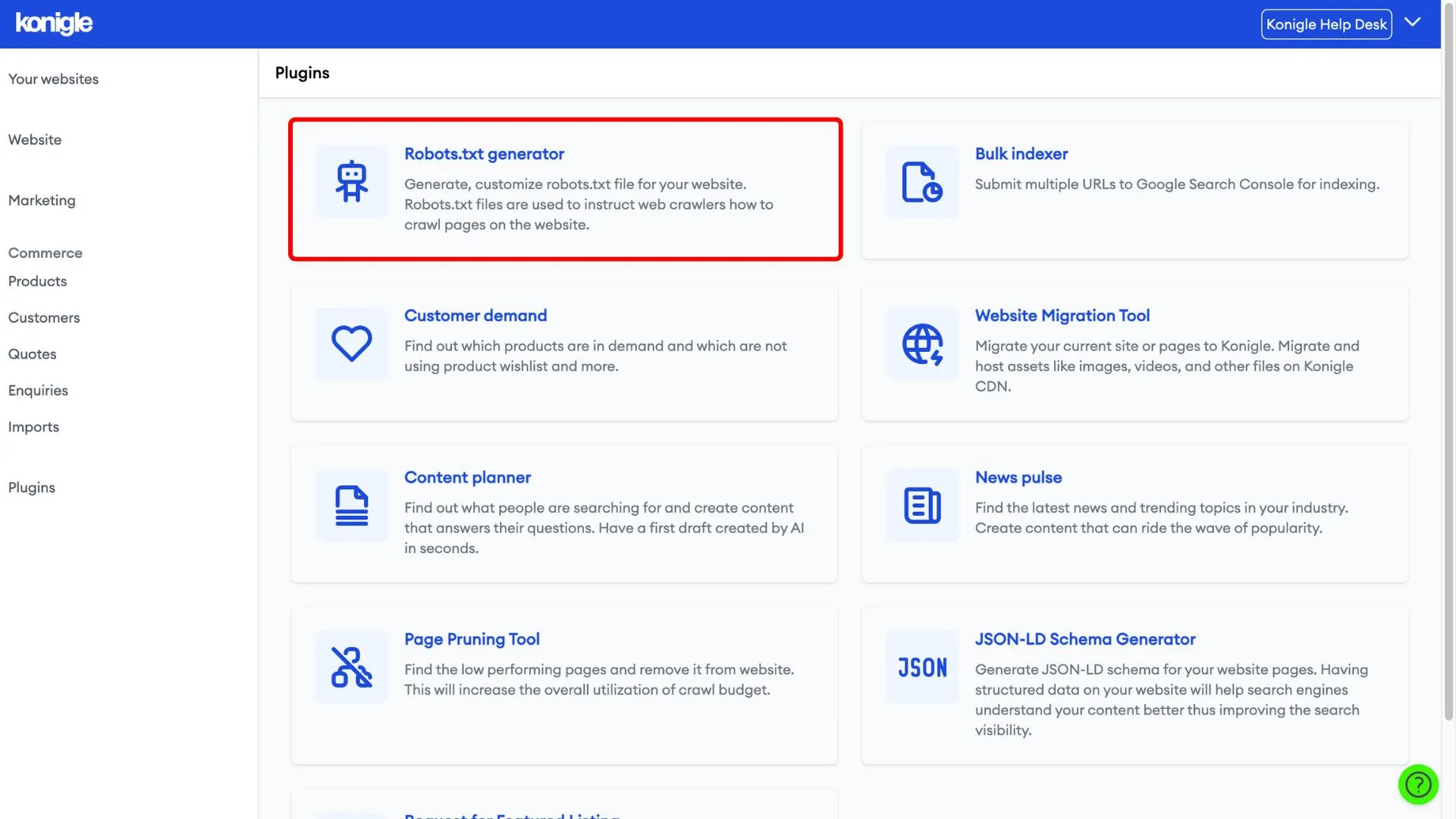

2. Go to the Robot.txt Generator plugin.

Generate a robots.txt file

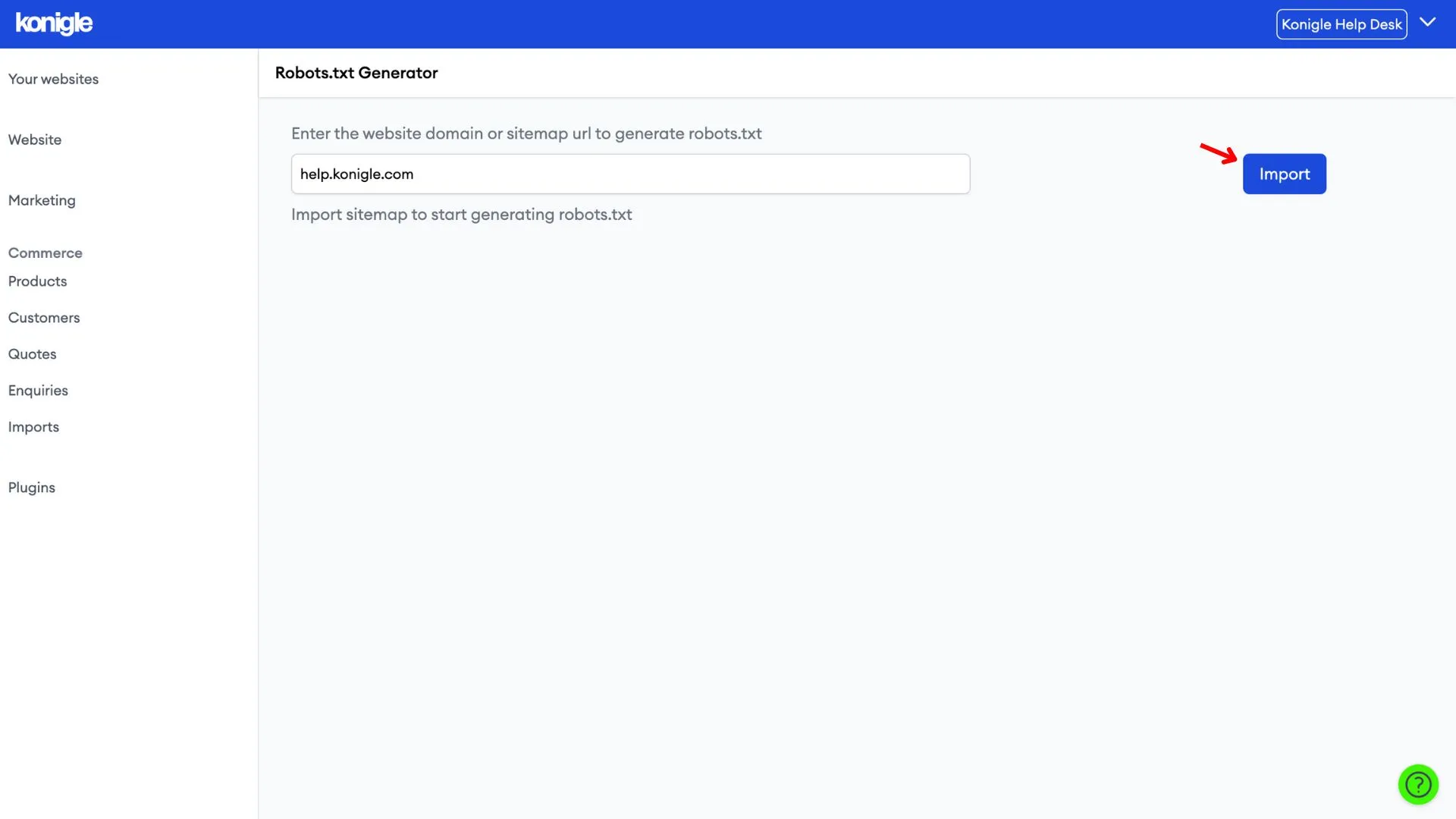

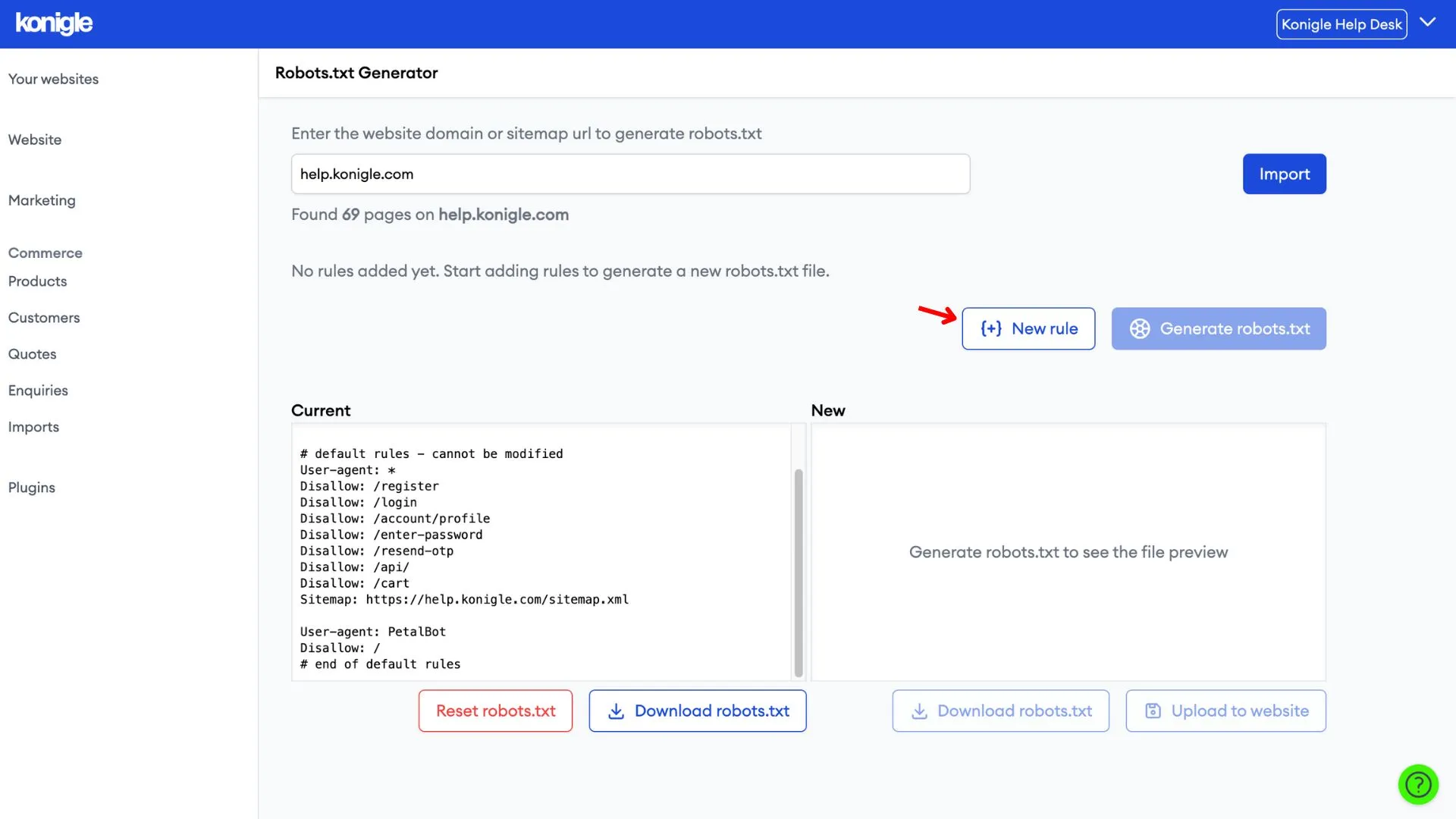

1. Import your website's robots.txt file.

2. Add new rules to your pre-existing robots.txt file. Note: The rules already in the imported file cannot be modified; you can only add new ones.

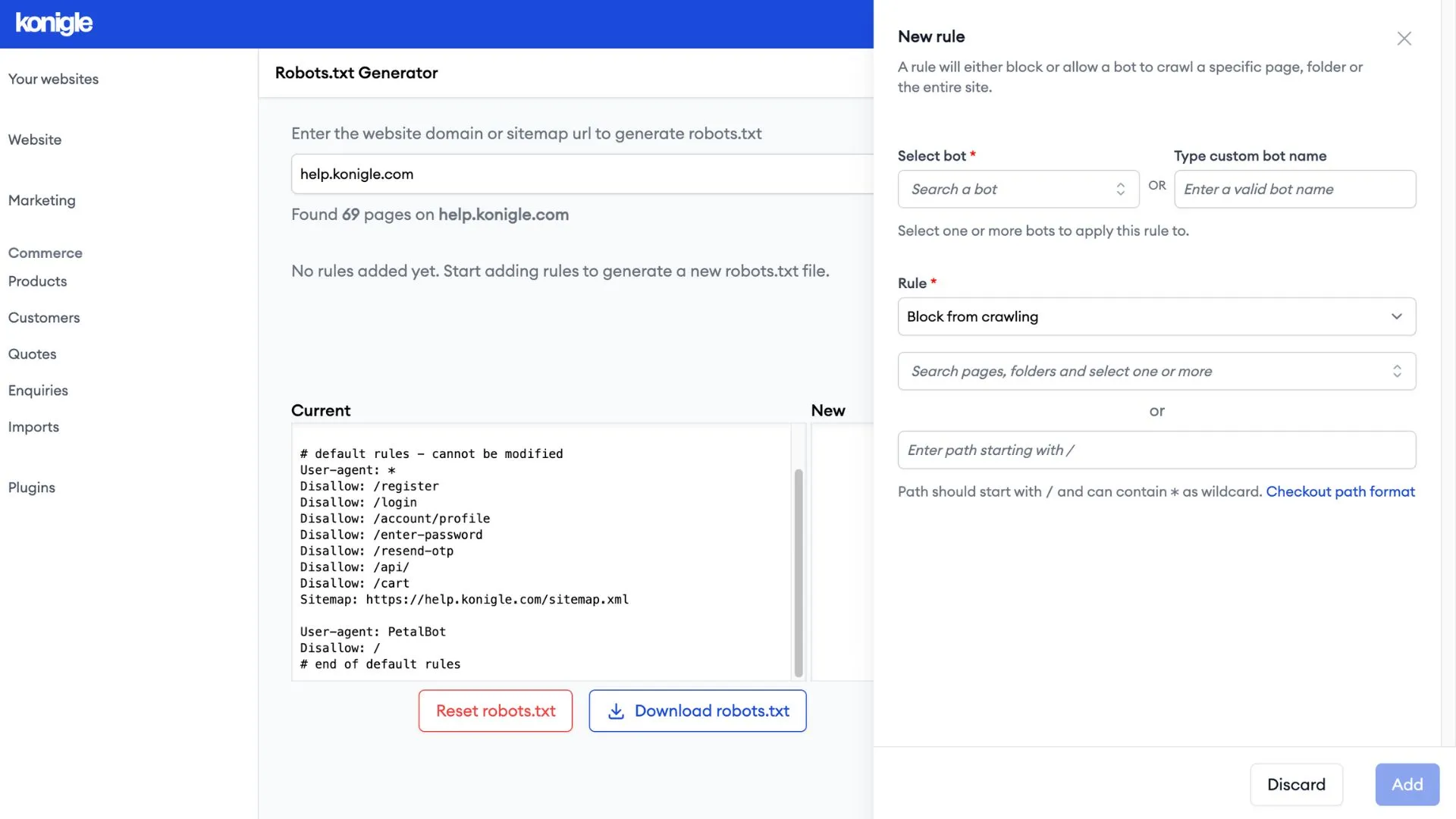

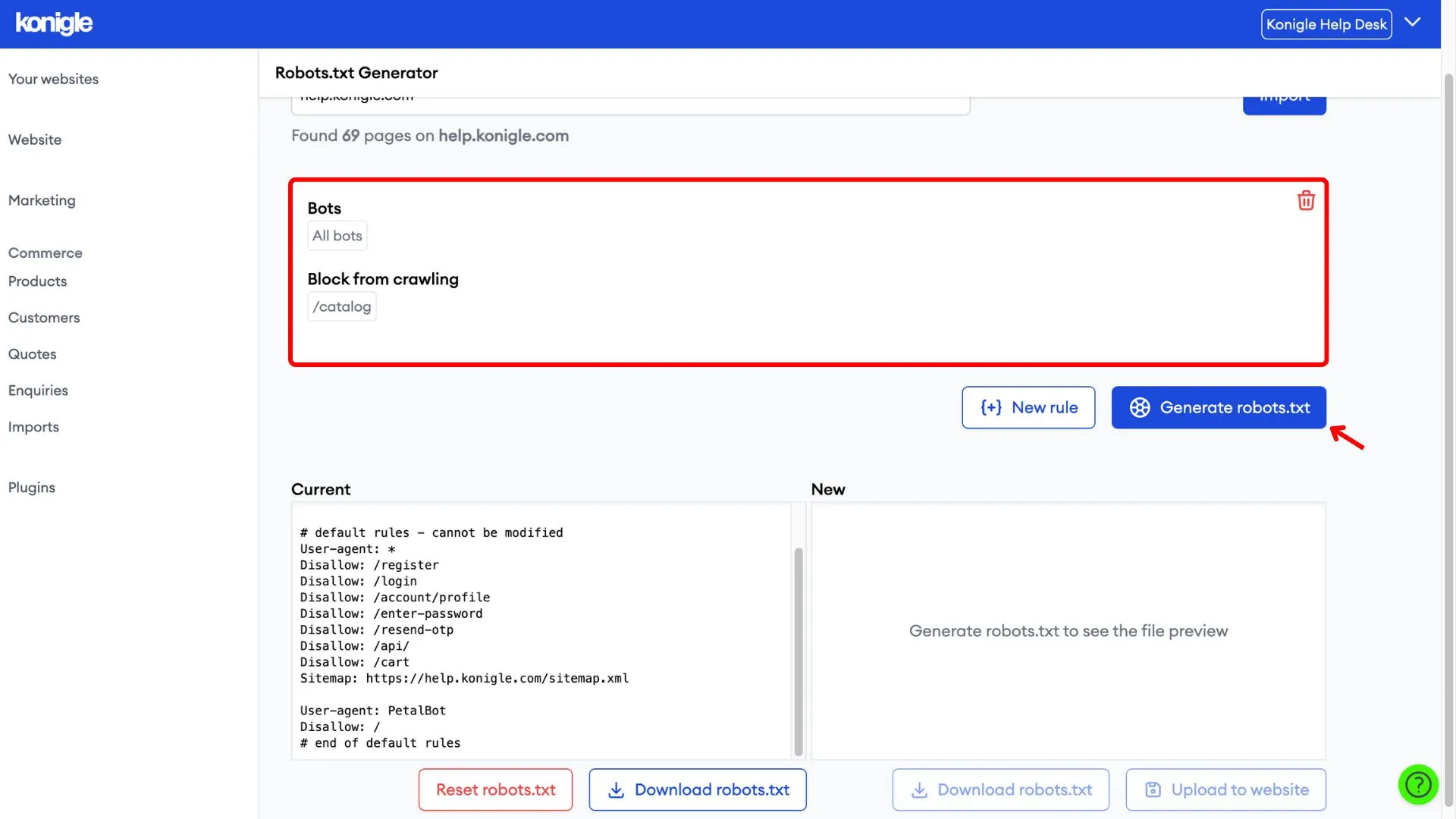

3. Configure the rule by selecting the bots it applies to and the pages those bots are allowed to crawl or blocked from crawling. Add the rule when you're done.

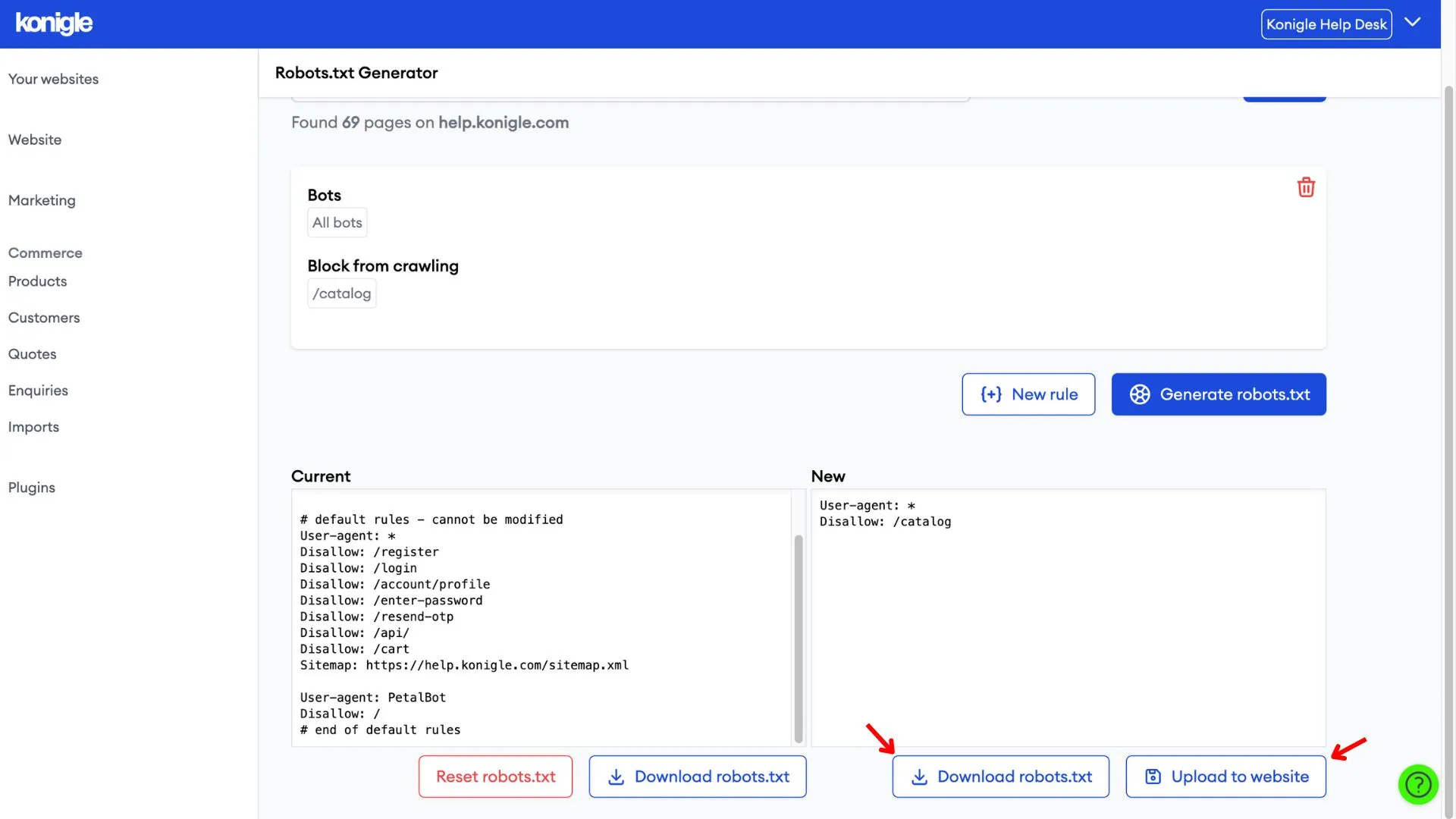

4. Generate a new robots.txt with the new rules.

5. Download the new robots.txt file or upload it directly to your website.
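The steps above can be sketched in a few lines of Python. This is only an illustration of the idea, not the plugin's actual implementation: the helper names `build_rule` and `append_rules`, the bot name `ExampleBot`, and the paths are all hypothetical. It assumes the imported file is kept as-is and the new rule groups are added after it.

```python
def build_rule(user_agent, allow=(), disallow=()):
    """Return one robots.txt rule group as text (hypothetical helper)."""
    lines = [f"User-agent: {user_agent}"]
    lines += [f"Allow: {path}" for path in allow]
    lines += [f"Disallow: {path}" for path in disallow]
    return "\n".join(lines)


def append_rules(existing_text, new_rules):
    """Leave the imported text unchanged and add new rule groups after it."""
    existing_text = existing_text.strip()
    parts = [existing_text] if existing_text else []
    parts += new_rules
    # Rule groups are separated by a blank line.
    return "\n\n".join(parts) + "\n"


# Example values, assumed for illustration only.
existing = "User-agent: *\nDisallow: /admin/\n"
new_rule = build_rule("ExampleBot", disallow=["/drafts/"])

robots_txt = append_rules(existing, [new_rule])
print(robots_txt)
```

The resulting text can be saved as `robots.txt` and uploaded to the root of the site.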

Frequently Asked Questions (FAQs)

Does my website need a robots.txt file?

No, your website doesn't necessarily need a robots.txt file. Search engines will try to crawl your site even if the file is missing. However, a robots.txt file gives you more control over what gets crawled, which can be helpful for some website owners.

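What is a robots.txt file and how do I implement it?

Robots.txt is a plain text file on your website that tells search engine crawlers which pages they may crawl. It helps you control how search engines explore your website. Note that it controls crawling rather than indexing: a blocked page can still appear in search results if other pages link to it.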

Here's a quick guide to implementing it:

- Use the robots.txt generator tool to create a robots.txt file for your website, specifying which pages crawlers are allowed to crawl and which are disallowed.

- Upload the robots.txt file to the root directory of your website.
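Before uploading, it can help to check how a crawler would read the rules. The sketch below uses `urllib.robotparser` from Python's standard library; the rules, the bot names `ExampleBot` and `OtherBot`, and the `example.com` URLs are made-up examples, not values taken from the generator.

```python
from urllib.robotparser import RobotFileParser

# Sample rules, assumed for illustration only.
robots_txt = """\
User-agent: *
Disallow: /admin/

User-agent: ExampleBot
Disallow: /
"""

parser = RobotFileParser()
parser.parse(robots_txt.splitlines())

# Any bot without its own group falls back to the "*" group.
print(parser.can_fetch("OtherBot", "https://example.com/blog/post"))
print(parser.can_fetch("OtherBot", "https://example.com/admin/settings"))

# ExampleBot has its own group, which blocks the whole site.
print(parser.can_fetch("ExampleBot", "https://example.com/blog/post"))
```

With these sample rules, the first check is allowed and the other two are blocked. Real crawlers may interpret some edge cases differently from this parser, so treat the result as a sanity check.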

How do I read robots.txt from a website?

You can read a website's robots.txt file by importing it with the free public robots.txt generator tool.
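Because a robots.txt file is served from the root of a site, it can also be read with a short script. The following is a minimal sketch using only Python's standard library; the function names `robots_url` and `fetch_robots_txt` and the `example.com` address are hypothetical, and the download step needs network access.

```python
from urllib.parse import urlsplit, urlunsplit
from urllib.request import urlopen


def robots_url(page_url):
    """Return the robots.txt URL for the site that hosts page_url."""
    parts = urlsplit(page_url)
    # Keep the scheme and host, replace the path, drop query and fragment.
    return urlunsplit((parts.scheme, parts.netloc, "/robots.txt", "", ""))


def fetch_robots_txt(page_url, timeout=10):
    """Download the site's robots.txt as text (requires network access)."""
    with urlopen(robots_url(page_url), timeout=timeout) as response:
        return response.read().decode("utf-8", errors="replace")


# Example address, assumed for illustration only.
print(robots_url("https://example.com/blog/post?id=1"))
```

Calling `fetch_robots_txt` with any page URL on your site returns the file's contents, or raises an error if the site has no robots.txt file.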